In this series on AI and the small law firm, we continue to discuss how small law firms can leverage their natural advantages with generative artificial intelligence to compete at a higher level.


Today’s legal market is not an arms race in which better-resourced firms can corner the market on technology. Rather, generative artificial intelligence (GenAI) gives firms of all sizes a chance to reassert the value of their lawyers’ expertise, their knowledge and their ability to meet clients’ specific needs, meaningfully leveling the playing field.

Further, artificial intelligence itself doesn’t replace lawyers’ expertise. Rather, it provides a platform for lawyers to leverage and extend their domain knowledge, enabling them to bring higher levels of productivity and service to the benefit of the client and firm alike.

In short, GenAI presents unprecedented opportunities for small law firms to compete.

Human Expertise Is Critical to the Success of AI

It’s common to hear concerns that GenAI and other technologies will cost lawyers jobs or diminish the value of their work. While AI might shift some specific tasks from humans to machines, the idea that it will diminish the contributions of human lawyers could not be further from the truth. In fact, GenAI requires legal domain expertise and skills to function correctly.

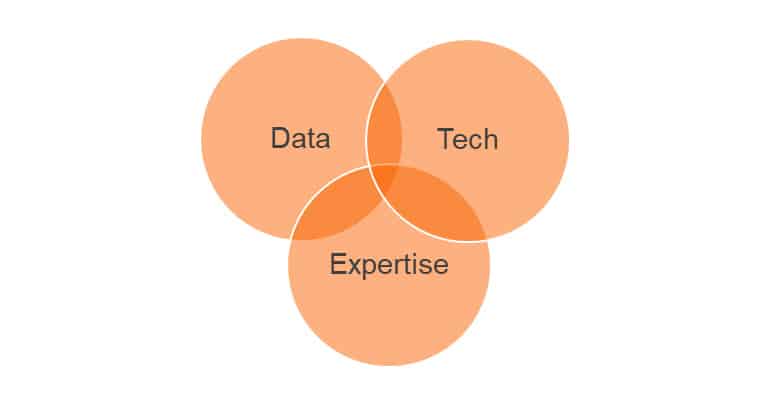

AI is not just a technology but a fusion of three essential resources: relevant data sets, technology tailored for specific applications, and the human expertise guiding its practical use and informing training and error analysis.

AI won’t work in a specialized domain like law unless all three of these components are present, and no one of them is more important than the other two.

Lawyer-Generated Data

In a highly specialized domain like law, extensive sets of accurate, up-to-date data such as statutes, regulations and case law are necessary to train the technology’s machine-learning algorithms. Moreover, without lawyer-generated data such as contracts, memoranda and prior work product, reliable outcomes from AI tools would be unattainable.

Technology

AI technology pairs data with tools built by expert developers who bring analytical and problem-solving skills together with a solid understanding of the target domains.

Legal Domain Experts

In the final component, legal experts guide AI training, verify algorithm performance and play a central role in correcting errors. They bridge the gap between technology professionals and domain-specific applications. Only lawyers think like lawyers, and their perspective is crucial to ensuring AI works correctly in legal practice.

What Happens When One Part Is Missing?

“Garbage in, garbage out” is one of the most time-tested rules of data and technology, and it’s having a moment amid popular early impressions of GenAI’s capabilities.

The widespread availability of early forms of the public-facing GenAI tool ChatGPT was useful because anyone could test it out, encouraging experimentation and driving initial innovation. Lawyers testing ChatGPT soon found that it could create legal documents that sounded very authoritative. However, they also found out that reliance on them could have real consequences.

The openly available versions of GenAI are really just sentence-completion engines trained on an unfiltered base of data from the internet. ChatGPT has no intelligence of its own; rather, it’s good at drafting language that sounds like a plausible response to a user’s prompt.

Because it lacks native intelligence, ChatGPT may sound like a lawyer, but it doesn’t deliver the accuracy and precision that lawyers require. It can cite, and has cited, nonexistent sources and invented facts. These errors are called hallucinations. In one now-infamous case, a New York lawyer submitted a brief supporting a motion that sounded good but included citations of nonexistent cases and other legal inaccuracies. He had simply asked ChatGPT to write his brief, with disastrous results.

ChatGPT doesn’t have the data training or domain expertise to be reliable. As a result, it can very easily introduce errors into its responses. It simply doesn’t know better.

The possibility of such errors presents an unacceptable level of risk for legal professionals. So how will lawyers, especially those at smaller firms, effectively use GenAI while maintaining all their client and ethical obligations?

Authoritative Legal Data Is the Backbone of GenAI

Technology also provides part of the answer to how lawyers can use GenAI effectively, and it does more than choose the words of a response. Retrieval-Augmented Generation (RAG) is one method designed to improve response quality in GenAI systems. In products that use RAG, the user’s prompts or queries do not pass directly to the underlying Large Language Model (LLM). First, the question runs as a search against a trusted body of content: for example, verified legal content from a legal publisher or trusted documents from the user’s organization.

Documents relevant to the question are retrieved, and then the question and the verified content are passed together to the LLM for processing. This helps ground the answers to users’ queries in trusted, domain-specific data rather than in a random sample of data (however large) from the wider internet.
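For readers curious about the mechanics, here is a minimal Python sketch of the retrieve-then-generate flow described above. It is an illustration only, not how any particular legal product works: it assumes a tiny in-memory collection of trusted documents with hypothetical names, and it uses crude keyword overlap in place of a real search engine. The final call to an LLM is left as a comment because it depends on the vendor.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical label for where the passage came from
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real search index."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Step 1: search the trusted corpus and keep the k best keyword matches."""
    q = tokenize(question)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [(s, d) for s, d in scored if s > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep corpus order
    return [d for _, d in scored[:k]]


def build_prompt(question: str, passages: list[Document]) -> str:
    """Step 2: hand the LLM the question together with the retrieved content."""
    context = "\n".join(f"[{d.source}] {d.text}" for d in passages)
    return (
        "Answer using only the sources below and cite them. "
        "If they do not answer the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical trusted collection: firm work product plus publisher content.
corpus = [
    Document("firm-memo-017", "Checklist for reviewing indemnification clauses in vendor contracts."),
    Document("firm-template-004", "Template engagement letter for small business clients."),
    Document("publisher-guide-112", "Practice guide on drafting indemnification and limitation of liability clauses."),
]

question = "What should I check in an indemnification clause?"
passages = retrieve(question, corpus)
prompt = build_prompt(question, passages)
print(prompt)
# answer = call_llm(prompt)  # hypothetical: the actual call depends on the LLM vendor
```

Real products typically use a proper search index or vector embeddings for the retrieval step, but the shape is the same: the model sees the user’s question only alongside content drawn from the trusted collection, which is why the quality of that collection matters so much.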

The idea that legal GenAI means turning over legal decision-making to a machine overlooks the importance of the data portion of the Venn diagram above. A properly trained and targeted GenAI application for legal use will build on the work of lawyers in the form of cases, statutes, briefs and memoranda, how-to guides, contracts, client advisories and templates: all the esoteric and robust data that results from lawyers’ expertise and knowledge.

All law firms, even smaller ones, have an opportunity to leverage their data assets against competitors at scale once their lawyers identify and curate the critical data sets within the firm and embed them in the AI solution. This proprietary data can be combined with larger pools of data from authoritative external sources, such as trusted legal publishers, to further strengthen the GenAI tool’s responses.

Legal Domain Expertise Is Vital for Success

Lawyers provide a layer of trust between clients and the systems that generate their various legal deliverables. Clients rely on lawyers’ domain expertise to ensure the right tools are used for their legal matters, with the best results. After all, clients hire lawyers because they want trusted experts representing them.

The recent Thomson Reuters Future of Professionals Report surveyed lawyers and accounting professionals about their concerns and expectations around the role of AI in their work. One of the report’s primary conclusions was: “As an industry, the biggest investment we will make is trust.” Trust is the legal advisor’s stock in trade. Tools and technology can enhance productivity and generate new types of service offerings, but in the end, it’s the legal expertise of human lawyers that creates the value clients rely on.

That layer of trust has several dimensions, including:

- Accuracy. Is the machine producing the correct result? Does the lawyer understand how the machine works and know how to evaluate its accuracy?

- Ethics. Is the lawyer accountable for the work product? Is the lawyer fully representing the client’s interests and using the best tools for the job?

- Security and confidentiality. Is the lawyer protecting client data?

- Value. Is the lawyer using sound judgment in choosing where automation creates value and where direct human interaction is more valuable?

Ultimately, the value clients see in their outside firms’ legal work is not determined by the underlying tools. It’s found in the client’s confidence in lawyers’ expertise in matching the right tool and methodology to the legal task and lawyers’ accountability for the result.

By combining strong proprietary data sets, access to the same technology and industry data as larger firms, and deep domain expertise with the inherent trust they build through high-touch client relationships, small law firms can use GenAI to achieve a scale and competitive strength previously unheard of in the legal industry.

This is the second in a series of blog posts about how small law firms can address the opportunities and pitfalls brought by generative artificial intelligence. Read part one of the “Generative AI and the Small Law Firm” series here.

Image © iStockPhoto.com.

Don’t miss out on our daily practice management tips. Subscribe to Attorney at Work’s free newsletter.